Got Data, but No AI? Why Your Data Architecture Might Be the Problem

Are you facing this too?

It’s more common than you think.

A company kicks off an AI project.

The budget’s approved. The latest tools are in place. A smart, capable data team is ready to roll.

Everyone’s excited—AI will predict problems, streamline decisions, and cut costs.

But a few months in… things start to stall.

The ideas looked great on paper—but in practice? Nothing really works.

So, what went wrong?

It’s not the people.

It’s not the tools.

It’s not the money.

The real roadblock? The data.

It’s messy. Disconnected. Spread across silos.

And that makes it nearly impossible for AI to deliver real results.

Having Data Isn’t the Same as Using Data

These days, most companies have plenty of data: sales records, customer details, machine logs, website clicks. The list goes on. But here’s the problem: that data often lives in different systems. The sales team can’t easily see what the operations team has. Finance data sits in yet another system. Different formats, different rules, and no easy way to connect it all.

So when you try to use AI, it struggles. Either it can’t find what it needs, or it ends up working with inconsistent, incomplete data. Either way, the insights it produces don’t help the business move forward.
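To make "different formats, different rules" concrete, here is a minimal Python sketch. The two record sets, their field names (`customer`, `customer_id`, `order_date`, `invoice_date`) and the helper functions are all hypothetical. They stand in for a sales system and a finance system that describe the same customers in incompatible ways. Until both sides are normalized to a shared key and date format, a simple lookup would match nothing at all.

```python
from datetime import datetime

# Hypothetical records from two siloed systems: sales keys customers as
# "CUST-0042" and writes US-style dates; finance uses bare integers and ISO dates.
sales = [
    {"customer": "CUST-0042", "order_date": "03/15/2024", "amount": 1200.0},
    {"customer": "CUST-0007", "order_date": "03/18/2024", "amount": 450.0},
]
finance = [
    {"customer_id": 42, "invoice_date": "2024-03-15", "paid": True},
    {"customer_id": 7, "invoice_date": "2024-03-20", "paid": False},
]


def normalize_sales(row):
    # Strip the "CUST-" prefix so both systems share an integer key,
    # and parse the MM/DD/YYYY string into a date object.
    return {
        "customer_id": int(row["customer"].removeprefix("CUST-")),
        "date": datetime.strptime(row["order_date"], "%m/%d/%Y").date(),
        "amount": row["amount"],
    }


def normalize_finance(row):
    # Finance already uses integer keys; only the ISO date needs parsing.
    return {
        "customer_id": row["customer_id"],
        "date": datetime.strptime(row["invoice_date"], "%Y-%m-%d").date(),
        "paid": row["paid"],
    }


def join_on_customer(sales_rows, finance_rows):
    # Index normalized finance rows by customer, then attach payment status
    # to each normalized sale (None if finance has no record for that customer).
    paid_by_customer = {
        r["customer_id"]: r["paid"] for r in map(normalize_finance, finance_rows)
    }
    return [
        {**s, "paid": paid_by_customer.get(s["customer_id"])}
        for s in map(normalize_sales, sales_rows)
    ]


for row in join_on_customer(sales, finance):
    print(row)
```

The sketch needs Python 3.9 or later for `str.removeprefix`. Notice how little of it is the actual join: most of the code is reconciliation, and every additional source with its own conventions adds another normalization step. That is exactly the kind of manual preparation that slows AI projects down.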

Why Your Data Structure Matters So Much

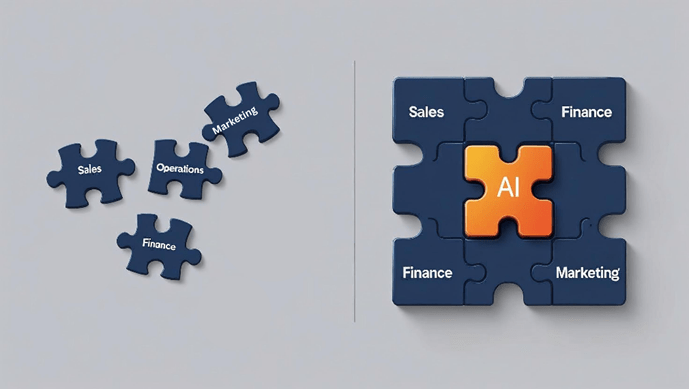

Think of your data like building blocks. If they don’t fit together properly, you can’t build anything strong, no matter how advanced your AI tools are.

Here’s a simple comparison that shows what a weak setup looks like versus a strong one that’s ready for AI:

| Weak Data Setup | Strong Data Setup |
|---|---|
| Data stuck in silos, hard to combine | Data connected across teams and systems |
| Outdated or messy data | Clean, consistent, high-quality data |
| Slow, manual preparation | Automated data flows ready for AI |
| Hard to get current information | Real-time data availability |
| Risk of compliance issues | Strong governance and secure practices |

When your data is structured well, AI can deliver the insights, predictions, and automation you want.

The Hidden Costs of a Weak Data Setup

When companies don’t fix their data structure, they end up paying for it in other ways. Teams spend more time cleaning and organizing data than building AI models. The insights AI produces are often unreliable because the underlying data is messy. And delays pile up: months can pass before the business sees any value from its AI efforts. The result is frustration across teams and wasted time, energy, and budget.

What You Can Do

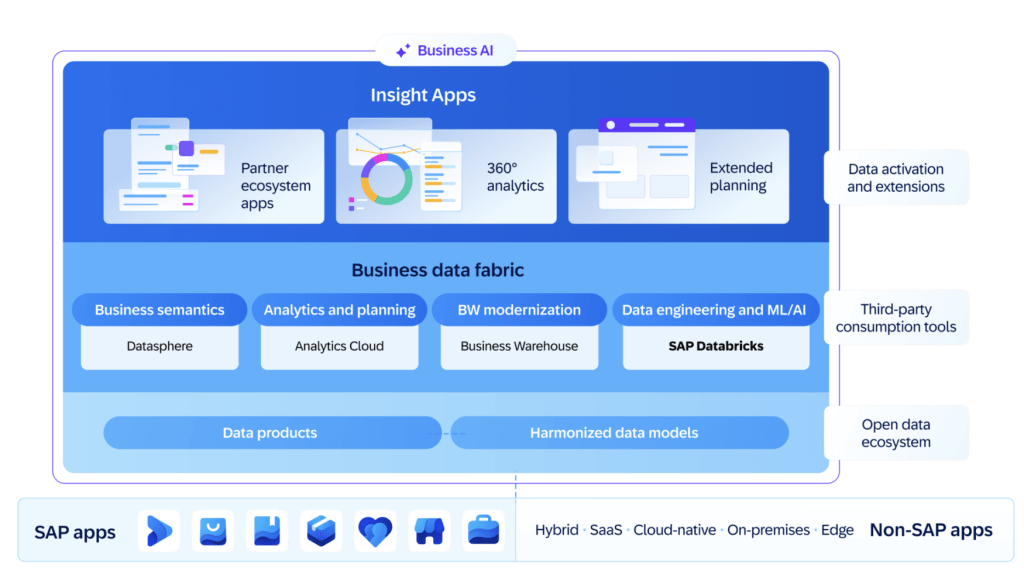

The good news is this can be fixed. The first step is to stop thinking of data as just an IT issue. Data is a core part of the business plan; it powers the decisions you want AI to help you make. That means planning your data setup with your AI goals in mind. Companies that succeed here often rethink how their data is connected, explore modern ways to manage and govern it, and make sure the data is accurate, safe, and up to date.

When the foundation is solid, AI can finally do what it’s supposed to do: help your business grow smarter, faster, and stronger.

The Big Takeaway

Having data is just the start.

If AI isn’t delivering the value you hoped for, take a closer look at how your data is structured. Fix that, and you’ll unlock the real power of AI.